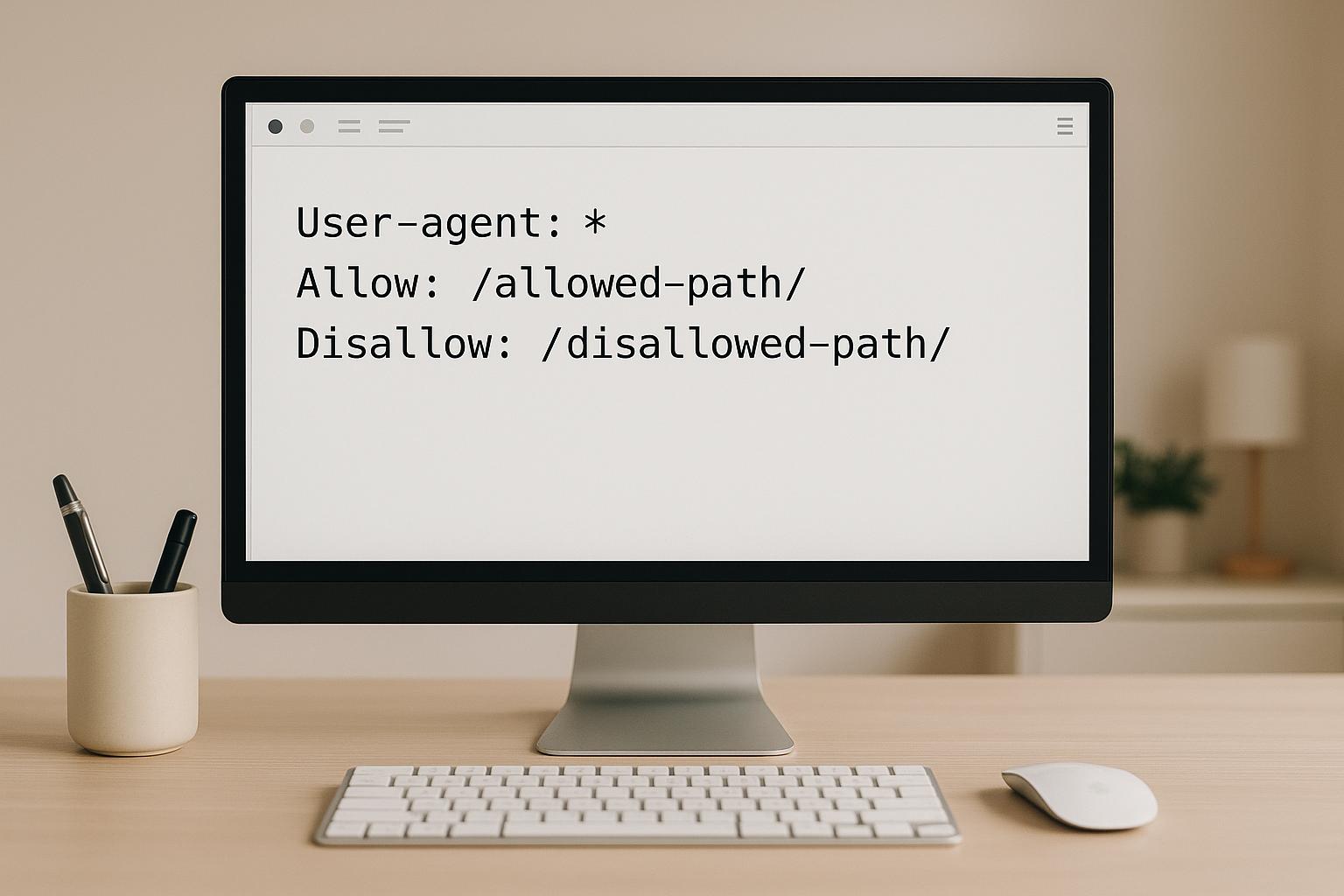

Robots.txt is a simple text file that acts as a guide for search engine crawlers, telling them which parts of your website they can or cannot access. It’s essential for managing your site’s visibility in search results, improving crawl efficiency, and keeping crawlers out of areas you don’t want crawled. Here’s what you need to know:

- Allow Directive: Grants access to specific content, even within restricted areas.

- Disallow Directive: Blocks crawlers from accessing specified files, directories, or patterns.

- Sitemap: Points crawlers to your XML sitemap for better site structure understanding.

- Crawl-Delay: Controls the time between crawler requests (not respected by all search engines).

Key Takeaways:

- Crawl Budget Management: Block less important pages to ensure crawlers focus on high-value content.

- Specificity Matters: More specific rules override general ones. For example, `Allow: /products/sale/` takes precedence over `Disallow: /products/`.

- Testing is Crucial: Use tools like Google Search Console to verify your robots.txt file is working as intended.

- Limitations: Robots.txt doesn’t remove already indexed pages or block direct access by users.

By balancing Allow and Disallow directives, you can fine-tune crawler behavior, safeguard sensitive areas, and improve your site’s SEO performance.


Robots.txt File Structure and Syntax

The robots.txt file needs to follow a strict structure – small mistakes can completely invalidate your instructions. Let’s break down the key components and required syntax.

Main Components of Robots.txt

A robots.txt file is made up of several directives that guide web crawlers on how to interact with your website.

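- User-agent: This specifies the crawler the rule applies to, such as Googlebot or Bingbot. You can also use an asterisk (*) as a wildcard to apply the rule to all crawlers. The user-agent name is matched without regard to case, but placement matters: each Allow or Disallow line applies only to the user-agent group it sits under.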

- Allow and Disallow: These directives control which parts of your site are accessible or restricted. Their values are paths relative to the site root (starting with /), and they support wildcards for more flexibility.

- Sitemap: This points crawlers to your XML sitemap, helping them discover your site’s structure. If you have multiple sitemaps, you can include multiple entries.

- Crawl-delay: This specifies a wait time between requests. However, not all crawlers respect this directive. Google, for instance, ignores it and adjusts Googlebot’s crawl rate automatically based on how your server responds.

Here’s an example of a properly formatted robots.txt file:

```
User-agent: *
Disallow: /admin/
Disallow: /private/
Allow: /public/
Crawl-delay: 10

# Googlebot follows only this group and ignores the * group above
User-agent: Googlebot
Disallow: /temp/
Allow: /temp/images/

Sitemap: https://example.com/sitemap.xml
```

Formatting Tips:

- Put each directive on its own line, written as `Directive: value`.

- Use the hash symbol (#) for comments, which can make the file easier to understand.

- Separate different user-agent blocks with blank lines for clarity.

- Directive names such as `Disallow` are case-insensitive, but paths are case-sensitive, so write each path with the exact capitalization your URLs use.
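To make the group structure concrete, here is a minimal Python sketch that splits a robots.txt file into user-agent groups and collects Sitemap lines separately. `Group`, `parse_robots`, and `rules_for` are hypothetical names, and the logic is deliberately simplified: it matches crawler names exactly (ignoring case) and stores every non-Sitemap line under the current group, which real crawlers refine further.

```python
from __future__ import annotations

from dataclasses import dataclass, field

ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /private/
Allow: /public/
Crawl-delay: 10

User-agent: Googlebot
Disallow: /temp/
Allow: /temp/images/

Sitemap: https://example.com/sitemap.xml
"""


@dataclass
class Group:
    agents: list[str] = field(default_factory=list)
    rules: list[tuple[str, str]] = field(default_factory=list)  # (field name, value)


def parse_robots(text: str) -> tuple[list[Group], list[str]]:
    """Split robots.txt into user-agent groups plus group-independent Sitemap URLs."""
    groups: list[Group] = []
    sitemaps: list[str] = []
    current: Group | None = None
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and outer whitespace
        if ":" not in line:
            continue  # blank or unrecognized line
        name, value = (part.strip() for part in line.split(":", 1))
        name = name.lower()  # field names are case-insensitive
        if name == "sitemap":
            sitemaps.append(value)  # Sitemap lines do not belong to any group
        elif name == "user-agent":
            # Consecutive User-agent lines share a group; one that follows rules opens a new group.
            if current is None or current.rules:
                current = Group()
                groups.append(current)
            current.agents.append(value.lower())
        elif current is not None:
            current.rules.append((name, value))
    return groups, sitemaps


def rules_for(groups: list[Group], crawler: str) -> list[tuple[str, str]]:
    """A crawler obeys only its own named group(s); it falls back to '*' when none exists."""
    name = crawler.lower()
    named = [rule for g in groups if name in g.agents for rule in g.rules]
    if named:
        return named
    return [rule for g in groups if "*" in g.agents for rule in g.rules]


groups, sitemaps = parse_robots(ROBOTS_TXT)
print(sitemaps)                        # the Sitemap URL, independent of any group
print(rules_for(groups, "Googlebot"))  # only the Googlebot group's rules
print(rules_for(groups, "Bingbot"))    # no Bingbot group, so the '*' rules apply
```

In this sketch, Googlebot receives only the two rules from its own group, so the `/admin/` and `/private/` blocks in the `*` group don’t apply to it. If a specific crawler should also obey the general rules, repeat them inside its group.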

How Search Engines Read Multiple Rules

Search engines follow specific rules when interpreting robots.txt directives, and understanding these can help you fine-tune your file.

- Specific rules override general ones: If you have a rule for "Googlebot" and another for "*", Googlebot will follow its specific instructions and ignore the general wildcard rule. This allows you to create tailored rules for different crawlers.

- Longest matching path wins: When multiple Allow or Disallow directives apply to the same URL, the one with the most specific (longest) path match takes precedence. For instance, if you have `Disallow: /products/` and `Allow: /products/sale/`, a URL like `/products/sale/shoes.html` will be allowed because the Allow rule is more specific. This is what lets you block an entire directory but still permit access to specific files or subdirectories.

- Allow beats Disallow on a tie: If both directives have the same specificity and target the same path, the Allow rule will take precedence.

- Wildcards and special characters: The asterisk (*) matches any sequence of characters, while the dollar sign ($) indicates the end of a URL. These can help you craft precise rules.
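The precedence rules above can be sketched in a few lines of Python. This is an illustrative approximation of Google’s documented behavior, not Google’s own parser: `pattern_to_regex` and `is_allowed` are hypothetical names, rules are passed as `(directive, path)` pairs, and details such as percent-encoding are ignored.

```python
from __future__ import annotations

import re


def pattern_to_regex(pattern: str) -> re.Pattern[str]:
    """Translate a robots.txt path pattern into a regex anchored at the start of the path.

    '*' matches any run of characters (including none), and a trailing '$'
    pins the match to the end of the URL; every other character is literal.
    """
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(piece) for piece in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], url_path: str) -> bool:
    """Resolve Allow/Disallow by specificity.

    The matching rule with the longest path wins; if an Allow and a Disallow
    tie on length, the Allow (less restrictive) rule wins. No match means allowed.
    """
    best_length = -1
    allowed = True
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty "Disallow:" value blocks nothing
        if pattern_to_regex(pattern).match(url_path) is None:
            continue
        is_allow = directive.lower() == "allow"
        if len(pattern) > best_length or (len(pattern) == best_length and is_allow):
            best_length, allowed = len(pattern), is_allow
    return allowed


rules = [("Disallow", "/products/"), ("Allow", "/products/sale/")]
print(is_allowed(rules, "/products/sale/shoes.html"))  # True: the longer Allow rule wins
print(is_allowed(rules, "/products/boots.html"))       # False: only the Disallow matches
```

Because `is_allowed` compares path lengths rather than positions, reordering the rules does not change the result, which mirrors how Google treats rule order.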

When testing your robots.txt file, keep in mind that different search engines may interpret certain edge cases differently. Google Search Console (its robots.txt report and URL Inspection tool) and Bing Webmaster Tools can help you confirm how their crawlers will handle your rules.

Following these guidelines ensures your robots.txt file works as intended and supports your crawl budget and indexing goals.

Using Disallow to Block Content

The Disallow directive is a handy tool for controlling which parts of your website search engine crawlers can access. When used correctly, it helps keep sensitive or irrelevant sections of your site off-limits to bots, ensuring they focus on the content you want indexed.

How Disallow Works

The Disallow directive tells search engine crawlers to avoid specific URLs or directories on your site. The syntax is simple and effective:

```
Disallow: /path/
```

This can be used to block a single file, an entire directory (e.g., /admin/), or even patterns using wildcards (e.g., /*?sessionid=). For example, you might use Disallow to block:

- Admin areas

- Development or staging environments

- Duplicate or irrelevant content

Want to block specific file types or URL parameters? Wildcards help here: `/*.pdf$` matches URLs that end in .pdf, while `/*.pdf` without the `$` also matches any URL that merely contains ".pdf".

What Disallow Cannot Do

While Disallow is a powerful tool, it does have its limits. Here’s what it can’t do:

- Remove pages from search results: Disallow only stops crawlers from accessing a URL; it doesn’t remove already indexed pages. To handle that, use a `noindex` tag or removal tools, and leave the page crawlable until it drops out, because a crawler that is blocked from fetching a page never sees its `noindex` tag.

- Prevent direct access: Anyone with the exact URL can still visit a disallowed page in their browser. This rule only applies to search engine bots.

- Stop links from passing authority: Even if a page is disallowed, links to it from other websites can still influence your site’s SEO.

- Guarantee blocking for all crawlers: While major search engines like Google and Bing respect robots.txt, some smaller or malicious bots might ignore it entirely.

Common Disallow Mistakes to Avoid

Despite its straightforward nature, mistakes in Disallow rules can have unintended consequences. Here are some common errors and how to avoid them:

- Blocking important content by accident: For instance, `Disallow: /` blocks your entire site, while `Disallow: /products/` might unintentionally block key product pages. Always double-check your paths.

- Ignoring case sensitivity: Robots.txt paths are case-sensitive, so `Disallow: /products/` does not block `/Products/`. Make sure your rules match the exact capitalization of your URLs.

- Forgetting the trailing slash: Rules are prefix matches, so `Disallow: /admin` (without a trailing slash) blocks `/admin/users/` but also unrelated paths such as `/admin.php` or `/administration/`. Use `Disallow: /admin/` to limit the rule to that directory.

- Overusing wildcards: For example, `Disallow: /*admin*` could unintentionally block unrelated pages like `/administrator-guide/` or `/badminton-tips/`.

- Confusing Allow and Disallow directives: These work differently, and mixing them up can lead to unexpected results. Google picks the most specific matching rule regardless of order, but some simpler parsers apply the first rule that matches, so listing a specific Allow before the broader Disallow is a safe habit.
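A quick way to see these pitfalls is to check individual patterns against sample URLs. The Python sketch below uses a hypothetical `blocks` helper that treats a Disallow value as a case-sensitive prefix match with the `*` and `$` wildcards described earlier. The sample paths are invented for illustration.

```python
import re


def blocks(disallow_pattern: str, url_path: str) -> bool:
    """Return True if one Disallow pattern matches the URL path.

    Matching is case-sensitive and anchored at the start of the path (a prefix
    match). '*' matches any run of characters; a trailing '$' anchors the end.
    """
    anchored = disallow_pattern.endswith("$")
    body = disallow_pattern[:-1] if anchored else disallow_pattern
    regex = ".*".join(re.escape(piece) for piece in body.split("*"))
    return re.match(regex + ("$" if anchored else ""), url_path) is not None


# (pattern, sample path) -> whether the pattern should catch the path
CHECKS = {
    ("/admin", "/admin/users/"): True,              # prefix match reaches subpaths
    ("/admin", "/administration/"): True,           # ...and anything else starting with "/admin"
    ("/admin/", "/administration/"): False,         # trailing slash limits it to the directory
    ("/*admin*", "/badminton-tips/"): True,         # a broad wildcard over-matches
    ("/products/", "/Products/shoes.html"): False,  # paths are case-sensitive
    ("/*.pdf", "/report.pdf.html"): True,           # without '$', text after ".pdf" still matches
    ("/*.pdf$", "/report.pdf.html"): False,         # '$' requires the path to end in ".pdf"
    ("/*.pdf$", "/report.pdf?download=1"): False,   # ...which also skips PDFs with a query string
    ("/*?sessionid=", "/cart?sessionid=42"): True,  # URL-parameter pattern
}

for (pattern, path), expected in CHECKS.items():
    result = blocks(pattern, path)
    verdict = "blocked" if result else "not blocked"
    print(f"Disallow: {pattern:<14} {path:<24} {verdict}")
    assert result == expected, (pattern, path)
```

The `/*.pdf$` rows show a trade-off worth checking on your own site: the `$` avoids over-matching, but it also lets PDF URLs with query strings through.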

To avoid these pitfalls, test your robots.txt file thoroughly. Tools like Google Search Console are excellent for checking how your rules affect crawling. Regularly review and update your file to ensure it continues to align with your site’s goals.

Up next, we’ll look at how the Allow directive lets you grant selective access, complementing the Disallow rules.

Using Allow to Grant Access

The Allow directive is like the flip side of the Disallow directive. It lets you fine-tune access for search engine crawlers, specifying which parts of your site they can explore, even within areas that are generally off-limits. Think of it as creating exceptions to the rules you’ve set with Disallow – opening up certain files or subdirectories for crawling.

How Allow Works

The syntax for Allow is straightforward and mirrors the structure of Disallow, but it does the opposite:

```
Allow: /path/
```

This comes in handy when you want to permit access to specific content within a blocked directory. For instance, you might block your entire /private/ directory but still allow crawlers to access a specific section, like /private/catalog/.

Here’s an example of how to set this up:

```
User-agent: *
Disallow: /private/
Allow: /private/catalog/
```

This setup ensures that while the /private/ directory remains off-limits, the /private/catalog/ section is accessible to crawlers. It’s an effective way to maintain control while allowing exceptions where needed.

Using Allow and Disallow Together

Previously, we looked at how the Allow and Disallow directives work individually. Now, let’s dive into how combining them can give you more refined control over search engine crawlers. By using these directives together, you can create detailed rules that safeguard sensitive areas of your site while ensuring key content remains accessible.

How Both Directives Work Together

When you combine Allow and Disallow directives, you can fine-tune how crawlers interact with your site. This approach creates exceptions within blocked areas, giving you the flexibility to manage access more precisely.

For example, imagine an e-commerce site that wants to block its administrative sections but still allow search engines to index specific parts, like product catalogs:

```
User-agent: *
Disallow: /admin/
Allow: /admin/product-catalog/
Allow: /admin/public-resources/
```

In this case, the /admin/ directory is blocked, but exceptions are made for /admin/product-catalog/ and /admin/public-resources/. These exceptions ensure that important content remains accessible while the rest of the admin section stays hidden.

The key here is that, under Google’s rules, more specific paths take precedence over broader ones no matter where they appear in the file. For instance, even though /admin/ is blocked, the Allow directive for /admin/product-catalog/ keeps this specific path crawlable.

Another common scenario involves staging areas. You might want to block access to most of the staging environment but allow crawlers to access demo pages:

```
User-agent: *
Disallow: /staging/
Allow: /staging/demo/
Allow: /staging/public-preview/
```

This setup blocks the main /staging/ directory while permitting access to specific pages you want search engines to see.

Best Practices for Writing Rules

To make the most of Allow and Disallow directives, follow these best practices:

- Start with broad blocks, then add exceptions. Begin by blocking entire directories with Disallow, and then use Allow directives to permit access to specific subdirectories or files. This makes your rules easier to follow and manage.

- Test your robots.txt file. After each change, check Google Search Console’s robots.txt report for fetch and parsing problems, and use the URL Inspection tool to see whether Googlebot treats key URLs as blocked. Testing helps you catch potential conflicts or errors.

- Keep your rules simple. Avoid overly complicated combinations of Allow and Disallow for the same path. If things get too complex, consider reorganizing your site’s directory structure to simplify your directives.

- Be consistent with trailing slashes. For example, `/admin/` blocks only the contents of that directory, while `/admin` blocks every path that starts with "/admin", including `/admin.html` and `/administrator/`. This small distinction can significantly impact how your rules function.

- Document your strategy. Add comments (lines starting with `#`) to your robots.txt file to explain why specific rules exist. This is especially helpful when multiple team members are involved in managing SEO.

Real Examples and Use Cases

Here are some practical scenarios where combining Allow and Disallow directives can optimize crawler behavior:

| Scenario | Robots.txt Rules | Outcome | Best For |
|---|---|---|---|
| Block admin, allow public tools | `Disallow: /admin/`, `Allow: /admin/tools/public/` | Admin area blocked except for public tools section | SaaS platforms with public utilities |
| Block user accounts, allow profiles | `Disallow: /users/`, `Allow: /users/profiles/` | Private user data blocked, public profiles crawlable | Social networks, professional platforms |
| Block development, allow documentation | `Disallow: /dev/`, `Allow: /dev/docs/`, `Allow: /dev/api-reference/` | Development files blocked except for public documentation | Software companies, API providers |
| Block checkout, allow thank-you page | `Disallow: /checkout/`, `Allow: /checkout/success/thank-you/` | Payment process blocked, thank-you page accessible | E-commerce sites wanting to track conversions |

By thoughtfully combining Allow and Disallow rules, you can strike a balance between protecting sensitive areas and ensuring important pages remain visible to search engines. This approach not only helps safeguard your site’s content but also supports its SEO goals.

As you implement these combinations, keep a close eye on your Search Console data. Overly restrictive rules can sometimes block pages you want indexed, so regular monitoring is essential to catch and fix any issues early.

Key Points for Robots.txt Success

Getting robots.txt right comes down to effectively using Allow and Disallow directives to guide crawlers. The goal is to block access to unnecessary content while ensuring search engines focus on your most valuable pages. A well-thought-out robots.txt file not only improves crawl efficiency but also helps search engines use their crawl budget wisely, which can lead to better indexing and overall performance.

The best approach? Start with broad blocks and then add specific exceptions for areas that need special attention. This method keeps your rules manageable and minimizes the risk of accidentally blocking crucial content.

Tips for Small Business Owners

For small business owners, applying these principles can make a big difference. Here are a few practical tips:

- Review and test your robots.txt regularly: Make it a habit to check your file at least once a quarter. As your site grows and changes, your robots.txt should adapt too. After each update, use Google Search Console’s robots.txt report and URL Inspection tool to confirm your directives work as intended.

- Keep an eye on Search Console data: Regularly monitor for crawl errors or sudden drops in indexed pages. These can be signs that your robots.txt rules are too restrictive. Set up alerts in Google Search Console to catch issues early, making them easier to fix.

- Document changes with comments: Always include notes in your robots.txt file about what changes were made, when, and why. This is especially helpful if multiple people manage your site or if you need to troubleshoot down the road.

How SearchX Can Help

SearchX offers a hands-on approach to robots.txt optimization as part of their technical SEO services. Their team carefully reviews your current setup, looking for ways to enhance crawl efficiency while safeguarding sensitive areas of your site.

Through detailed audits, they develop tailored robots.txt strategies that align with your website’s unique structure and business goals. Whether you’re adding new content, reorganizing pages, or launching new features, SearchX ensures your robots.txt file evolves with your site.

Their team handles the technical heavy lifting, so you can focus on growing your business. With SearchX, you get peace of mind knowing your crawl directives are always working to support your SEO strategy and drive better search engine performance.

FAQs

How do I make sure my robots.txt file blocks sensitive content without affecting important pages?

To make sure your robots.txt file effectively blocks sensitive content while leaving key pages accessible, use clear and specific directives. For instance, apply Disallow to restrict access to certain areas and Allow to keep essential pages visible. Steer clear of overly broad rules that could accidentally block important content.

Regularly test your robots.txt file using tools like Google Search Console or trusted validators to ensure everything is functioning as intended. Additionally, keep an eye on your site’s crawl logs to confirm search engines are reaching the right pages while avoiding restricted ones. This hands-on approach helps maintain both your site’s security and its SEO performance.

What are the most common mistakes to avoid when using Allow and Disallow directives in a robots.txt file?

When using Allow and Disallow directives in a robots.txt file, a few common missteps can create unexpected challenges for your site’s SEO. Here are some pitfalls to be aware of:

- Order can matter for some crawlers: Google picks the most specific matching rule regardless of where it appears in the file, but simpler parsers may apply the first rule that matches. Listing a specific `Allow` before the broader `Disallow` for the same path gives the same result under both approaches.

- Syntax slip-ups: Small errors like typos, missing colons, or incorrect formatting can cause search engines to misread or completely ignore your directives. Always double-check the syntax to avoid trouble.

- Rules that are too restrictive: Blocking too many paths can prevent search engines from accessing essential content, which can hurt your site’s visibility in search results.

To steer clear of these problems, check your file with Google Search Console’s robots.txt report and URL Inspection tool. Make sure your directives are not only error-free but also align with your overall SEO goals.
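Rule order is easy to test with Python’s standard-library `urllib.robotparser`, which is one example of a parser that applies the first matching rule rather than the most specific one. The crawler name `ExampleBot` and the URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

DISALLOW_FIRST = """\
User-agent: *
Disallow: /private/
Allow: /private/catalog/
"""

ALLOW_FIRST = """\
User-agent: *
Allow: /private/catalog/
Disallow: /private/
"""


def can_crawl(robots_txt: str, url: str) -> bool:
    """Ask the standard-library parser whether a placeholder crawler may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly instead of fetching it
    return parser.can_fetch("ExampleBot", url)


url = "https://example.com/private/catalog/item.html"
print("Disallow listed first:", can_crawl(DISALLOW_FIRST, url))
print("Allow listed first:   ", can_crawl(ALLOW_FIRST, url))
```

With the Disallow line first, this parser reports the catalog page as blocked; with the Allow line first, it reports it as crawlable. Google would allow the page in both cases, so putting the specific Allow first keeps the outcome consistent across parsers.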

How do specific rules in a robots.txt file influence how search engines crawl a website?

The level of detail in your robots.txt file is crucial because it directly influences how search engine crawlers, such as Google’s, interact with your site. These crawlers follow a hierarchy where more specific rules take priority over broader ones, allowing you to fine-tune which parts of your site are accessible or restricted.

For instance, if you set a rule targeting a specific folder or file, it will override a general rule meant for the entire site. This precision is key to controlling your site’s visibility in search results and aligning your crawling preferences with your overall SEO goals.